Perception and Navigation

At the Robotic Systems Lab, we have extraordinary robots that can locomote in challenging environments. However, carrying out autonomous missions also requires a high-level understanding of the environment as well as reliable pose and state estimation. These perception and navigation capabilities are crucial for enabling the robots to operate effectively in complex and dynamic environments. The core areas our group focuses on are:

Navigation

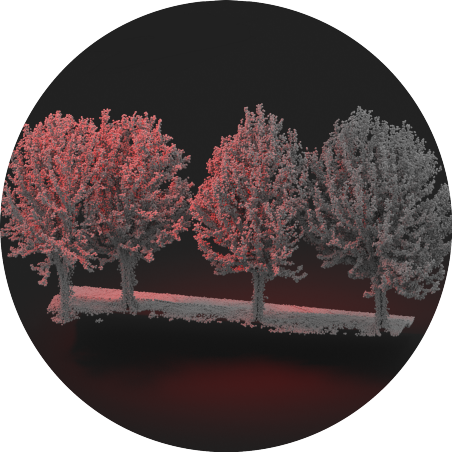

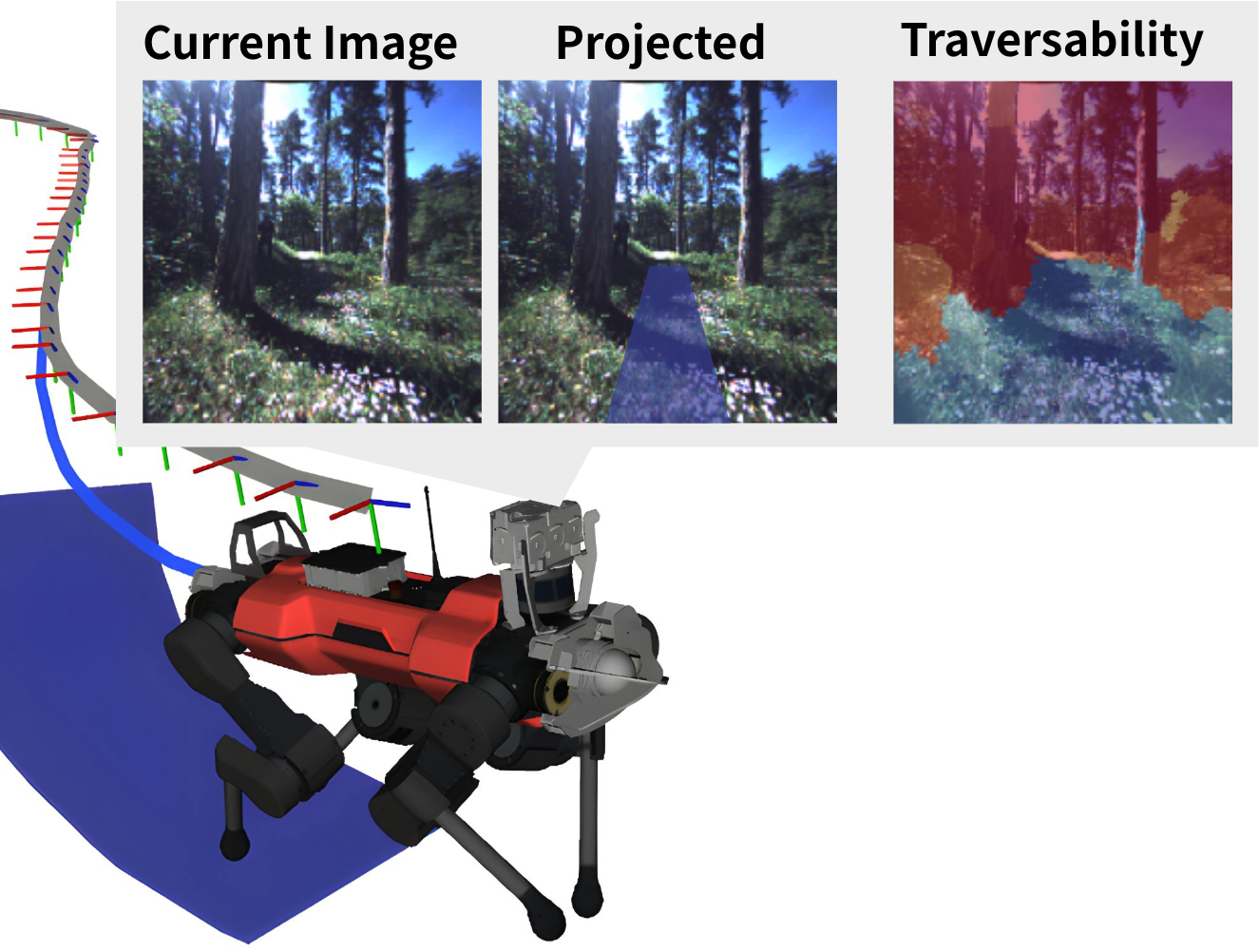

Legged robots possess remarkable agility, which makes them ideal for tackling challenging outdoor environments. These environments often feature intricate geometries, non-rigid obstacles such as bushes and grass, and demanding terrain such as muddy or snowy ground. Achieving full autonomy in these settings requires navigation algorithms that account for terrain geometry, physical properties, and semantics. Our research addresses all aspects of navigation, including local and global path planning as well as traversability analysis. Our planning approaches include sampling-based methods, end-to-end trained methods, and reinforcement learning agents, all aimed at safe navigation through such complex terrain.
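To make the idea of traversability analysis more concrete, here is a minimal sketch of a purely geometric traversability score computed from a 2.5D elevation map. It is an illustrative assumption, not our method: it considers only local slope (ignoring terrain properties and semantics), and the function name `geometric_traversability` and the `max_slope_deg` cutoff are hypothetical.

```python
import numpy as np


def geometric_traversability(elevation: np.ndarray, resolution: float,
                             max_slope_deg: float = 30.0) -> np.ndarray:
    """Score each cell of a 2.5D elevation map in [0, 1] from local slope only.

    elevation: (H, W) grid of terrain heights in meters.
    resolution: cell size in meters.
    max_slope_deg: hypothetical slope at which a cell becomes untraversable.
    """
    # Finite-difference height gradients along rows (y) and columns (x).
    dz_dy, dz_dx = np.gradient(elevation, resolution)
    # Local slope angle from the gradient magnitude.
    slope_deg = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    # Score decreases linearly from 1 on flat ground to 0 at max_slope_deg.
    return np.clip(1.0 - slope_deg / max_slope_deg, 0.0, 1.0)


if __name__ == "__main__":
    # A 15-degree ramp sampled on a 10 cm grid.
    ramp = np.tile(np.arange(5) * 0.1 * np.tan(np.radians(15.0)), (5, 1))
    print(geometric_traversability(ramp, resolution=0.1))
```

With the default 30-degree cutoff, the 15-degree ramp scores about 0.5 everywhere. A learned or semantics-aware model would replace the hand-tuned slope rule, for example to treat tall grass as passable even where the raw geometry looks rough.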

Simultaneous Localization and Mapping

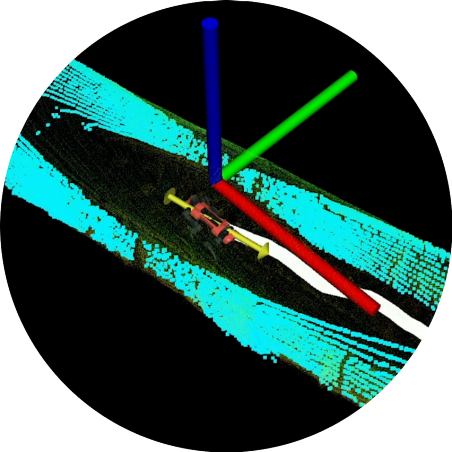

Visual-, IMU-, and LiDAR-based simultaneous localization and mapping (SLAM) is a core component that allows legged robots to navigate and plan autonomously in complex environments. Our group focuses on SLAM in challenging and feature-deprived environments to push the limits of state-of-the-art methods. In such environments, localization can become degenerate when the observed geometry does not constrain every direction of motion, for example along the axis of a long, featureless tunnel. Identifying and mitigating this degeneracy is crucial to keep the environment representation (the point-cloud map) clean and intact. In addition, the quality and smoothness of the localization estimate are crucial for the success of downstream tasks such as terrain traversability estimation.
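One widely used way to detect degeneracy is to inspect the eigenvalues of the scan-matching Hessian: directions with near-zero eigenvalues are poorly constrained by the data. The sketch below illustrates this test under simplifying assumptions. It considers translation only (no rotation) for point-to-plane residuals, and the function name and `threshold` value are hypothetical. It is not necessarily the approach used in our work.

```python
import numpy as np


def degenerate_directions(normals: np.ndarray, threshold: float) -> np.ndarray:
    """Return unit translation directions that the plane normals fail to constrain.

    normals: (N, 3) array of unit surface normals from point-to-plane matching.
    threshold: hypothetical eigenvalue cutoff below which a direction is degenerate.
    """
    # For residuals r_i = n_i . (p_i + t - q_i), the Jacobian w.r.t. the
    # translation t is n_i^T, so the Gauss-Newton Hessian is H = sum_i n_i n_i^T.
    hessian = normals.T @ normals
    # eigh handles symmetric matrices: ascending eigenvalues, eigenvectors as columns.
    eigenvalues, eigenvectors = np.linalg.eigh(hessian)
    degenerate = eigenvalues < threshold
    # One row per degenerate direction (shape (0, 3) if none).
    return eigenvectors[:, degenerate].T


if __name__ == "__main__":
    # Tunnel-like scene: only side walls (+/-y) and floor/ceiling (+/-z) are observed.
    tunnel = np.array([[0, 1, 0], [0, -1, 0], [0, 0, 1], [0, 0, -1]] * 50, dtype=float)
    print(degenerate_directions(tunnel, threshold=1e-6))
```

In the tunnel example, the only degenerate direction is the tunnel axis (±x). A mitigation strategy could then, for instance, restrict the scan-matching update to the well-constrained subspace and rely on other modalities along the degenerate one.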

For most autonomous-robot tasks, and especially for SLAM, fast and reliable state and motion estimates are critical building blocks. To achieve reliable estimates, our methods must handle multiple sensing modalities and reliably detect outliers and faulty measurements. In mixed environments, for example those that are partly indoors and partly outdoors or that have varying lighting conditions, all available modalities should be used to estimate the robot's current state.
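A common building block for rejecting outliers in filter-based state estimation is chi-squared gating. A measurement is accepted only if its innovation (measurement minus prediction) is statistically plausible under the predicted innovation covariance. The following is a minimal sketch of that standard technique, not a description of our specific pipeline. The function name `passes_gate` and the choice of a 99% gate for a 3D measurement are assumptions for illustration.

```python
import numpy as np

# 99% quantile of the chi-squared distribution with 3 degrees of freedom
# (assumed gate for a 3D measurement, e.g. a position fix).
CHI2_99_3DOF = 11.345


def passes_gate(measurement, predicted, innovation_cov, gate: float = CHI2_99_3DOF) -> bool:
    """Accept a measurement if its squared Mahalanobis distance is below the gate."""
    innovation = np.asarray(measurement, dtype=float) - np.asarray(predicted, dtype=float)
    # d^2 = nu^T S^{-1} nu; solving the linear system avoids an explicit inverse.
    d2 = float(innovation @ np.linalg.solve(innovation_cov, innovation))
    return d2 <= gate


if __name__ == "__main__":
    S = np.eye(3) * 0.01  # 10 cm standard deviation per axis
    print(passes_gate([0.1, 0.1, 0.1], [0.0, 0.0, 0.0], S))  # small innovation
    print(passes_gate([1.0, 0.0, 0.0], [0.0, 0.0, 0.0], S))  # 1 m jump
```

Here the first measurement (d² = 3) is accepted and the 1 m jump (d² = 100) is rejected as a likely faulty reading. In a multi-modal estimator, each sensor stream can be gated separately, so that one degraded modality does not corrupt the fused state.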

Scene Understanding

We believe that robust scene understanding is a crucial part of the next level of autonomy. In our research, we investigate how to fine-tune large pre-trained models and how best to use them for downstream tasks such as navigation and localization.

Sensor Suite

At the heart of our robots' perception and navigation capabilities lies a sophisticated sensor suite. Our existing systems predominantly use RGB and depth cameras, LiDAR, and IMUs. However, these sensors are subject to inherent noise that can only partially be modeled in simulation, which makes working effectively with these modalities a significant challenge.