SNF - data-driven control

Data-driven control approaches for advanced legged locomotion

Project identifier: SNF 166232

Project duration: 1.7.2016 - 30.6.2019

Project Researcher: Jemin Hwangbo, Jan Carius, Vassilios Tsounis, Yves Zimmermann

Legged locomotion requires dexterous control of contact forces for propulsion and keeping balance. A legged robot has to continuously decide where to place its feet and how much force to apply. In this project, we explored two complementary approaches to solving this locomotion problem without any pre-specified movement patterns or stability constraints.

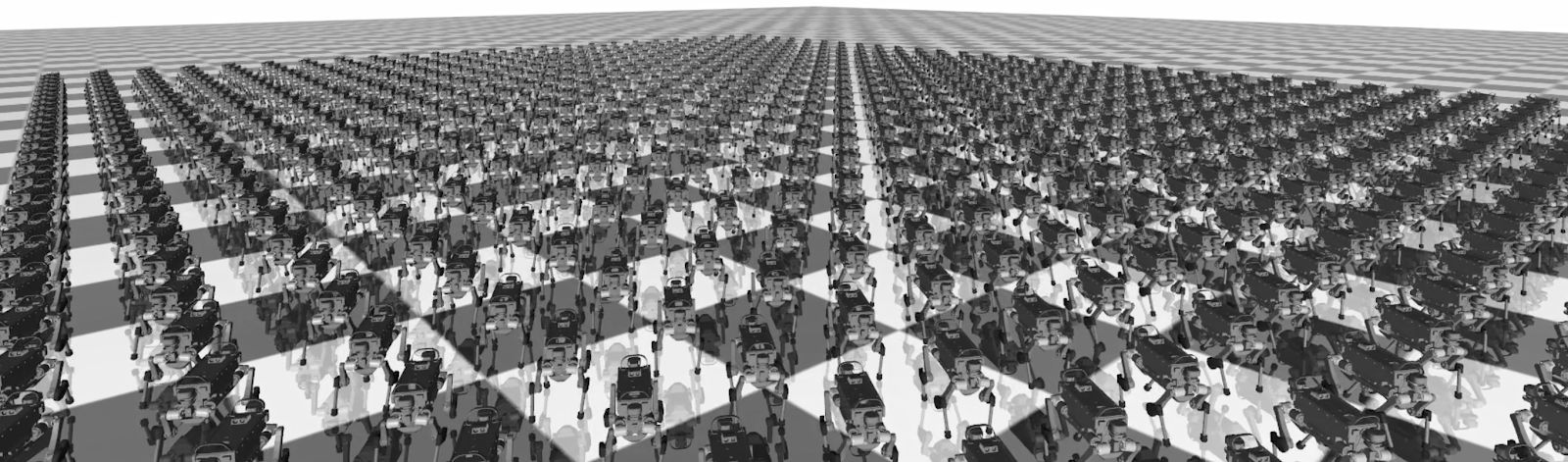

The first stream of research includes the development of reinforcement learning-based control strategies. In particular, we employed a physics engine as a primary tool for building control policies for the real robot. By training a policy in a simulated environment, we

eliminated the risk of damage to the real robot. However, this necessitates an accurate and fast physics engine. A severe discrepancy between the simulated system and the real robot can lead to a failure in the transfer.

Our work on contact dynamics [2] addressed this issue. We exploited a rigid, piece-wise-convex contact model to accurately capture the dynamics of contacts made by robotic systems. The resulting contact problem is solved by a new efficient nonlinear optimizer, which exploits the structure of the problem for fast and stable convergence. Integrating such a solver to a custom simulation framework resulted in an extraordinary performance: it could simulate an 18-dof leg system 1000 times faster than real time on a single CPU thread. The accuracy of the simulator was verified experimentally for the system of interest and we concluded that it is adequate for policy training. A detailed performance evaluation of this simulator is provided in external page https://leggedrobotics.github.io/SimBenchmark/ .

There has been a large request by other research labs and companies to use this tool. We are considering right now to commercialize this technology and hence only released a closed-source version with time-limited license (external page https://github.com/leggedrobotics/raisimLib).

We first used the developed simulator to train control policies for a quadrotor system [1] as a pilot study. In this work, we also developed a new learning algorithm, which addresses the stability issue associated with deterministic policy gradient algorithms. We incorporated the idea of trust region optimization to the policy update and successfully trained a control policy that is robust enough on the real robot. It was able to recover from multiple hand launches, even when thrown in a nearly entirely upside-down configuration. The journal publication that came out of this work is still one of the most popular paper of all time in the IEEE Robotics

and Automation Letters.

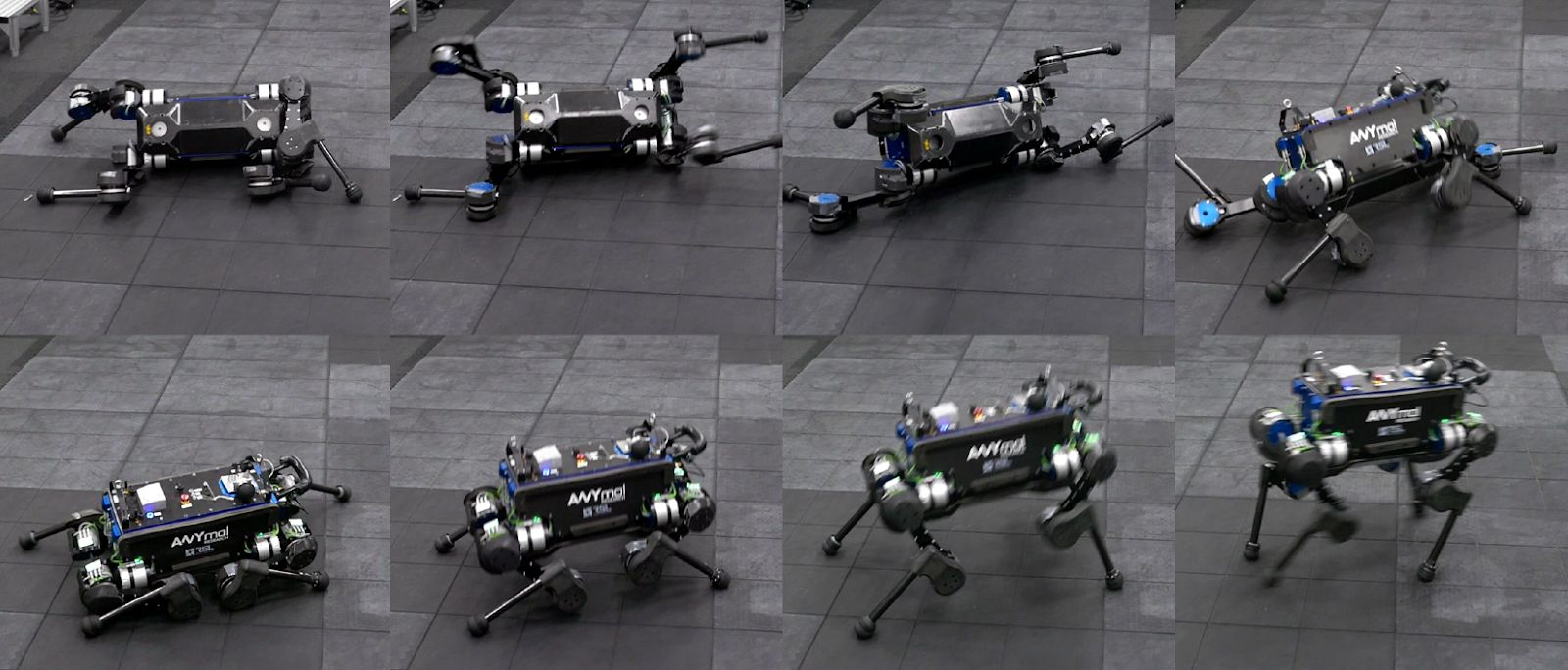

Applying the same method to a state-of-the-art legged robot was more challenging due to unmodelled actuator and software dynamics, which is not present in our physics engine. This discrepancy, which is commonly referred to as a reality gap, was prohibiting a successful transfer to the target system. Hence, we developed a new method whereby the rigid-body dynamics [2] can be augmented with a data-driven model in the form of a trained neural network [5]. Using this method, the combined model successfully closed the reality gap: the policies trained using only simulated data performed remarkably well on the real system. We evaluated the trained policies in two tasks: locomotion and self-righting tasks. The policy trained for the former task could follow the commanded velocity more precisely

while consuming less power, less computational cost and less torque than the best controller running on the same system did. Regarding the self-righting task, the trained policy could achieve agile recovery motions in a situation involving multiple arbitrary contact points, which was far beyond the capabilities of the previous approaches. These results successfully proved that the proposed learning-based control approach is a promising option for controlling complex robotic systems. The work was successfully published in Science Robotics and created a large impact in the legged robotics community. Several prominent news reported about the novel method ( https://rsl.ethz.ch/publications-sources/Media.html).

In the second line of work, we investigated the possibility of using an accurate multi-body model together with the assumption of hard ground contact to optimize walking motions from scratch. To avoid any ungrounded assumptions on the movement patterns (e.g., the gait), we modeled the effects of ground contacts as part of our system dynamics. In this way, the optimization algorithm only sees the combined robot-ground behavior and optimizes the control commands in light of the robot’s interaction with the environment. Therefore, we can avoid defining a predefined contact sequence and let the optimizer automatically come up

with an appropriate plan.

The availability of a model allows us to calculate gradients of the system's state with respect to the control inputs. We can, therefore, infer accurately which change in control inputs are necessary to reach a desired state. This is much richer information than just a merit score of the current input sequence and will lead to a significantly fewer number of required samples. Hence, instead of trial-and-error evaluations of our policy, the data extracted from one iteration of our algorithm additionally includes the direction in which we should improve. It typically takes less than 100 optimizer iterations until convergence.

In our initial study [3], we reported on how a solver for rigid contacts can be combined with the dynamics of a multi-body system. This joint model of the robot and the environment was implemented in a way that allows taking derivatives of the cost function with respect to the robot’s state and control input. We then used a state-of-the-art optimization algorithm to iteratively improve the sequence of control inputs with respect to a cost function. We showed that the combination of an established optimal control algorithm and a model of the system dynamics that includes the effect of contacts leads to an algorithm that automatically

discovers a multi-phase motion with both contact and flight phases. This result was exemplarily shown on a single-legged hopping robot.

In the follow-up project [4], we extended these ideas to a quadrupedal robot. We showed that after some modifications and improvements, the same algorithm can find a walking motion from scratch, i.e., without a predefined gait pattern. The choice of a single-shooting optimization algorithm guarantees that the optimized trajectories remain feasible with respect to the system model. Our system model proved accurate enough such that more than a dozen footsteps could be stably tracked on the robot without replanning.

Interestingly, our contact model also allowed the optimizer to discover and make active use of slipping contacts. Such behavior is typically avoided (if not impossible) in standard motion optimization algorithms because contact interactions are strictly modeled as no-slip conditions. Sliding contacts may, however, become useful when the degrees of freedom of the system are not sufficient to complete a given task or when walking on very slippery surfaces.

Finally, this model-based method also opens the door to online replanning in light of new information (e.g., robot state, environmental conditions). The ability of predicting the future with a dynamics model is a powerful tool for crafting robust and adaptive controllers. Initial experiments showed that receding horizon control is capable of stabilizing the system by

taking additional footsteps to regain balance.

In our latest submissions, we combined model-based control with the learning pipeline. In [7], we use MPC as an expert for policy training, in the very promising work [8] we use an optimization-based transition feasibility criteria to learn a gait planner and gait controller network.

In this project, we have investigated two promising directions of legged robotic control: a simulation-based learning approach and a model-based optimization approach. In both directions, we obtained performant controllers for the real robot. The two directions share a lot of common ground and the combination of both results in very powerful and complete method. We are convinced that our proposed methods can be employed to overcome many of the challenges remaining in legged robotics.

Individual Contribution to PhD

Dr. Jemin Hwangbo graduated in the course of the SNF project with the thesis entitled “Simulation to Real World: Learn to Control Legged Robots”. The learning papers listed in the Research Output corresponds to a large fraction of this excellent PhD thesis. The

student has now offers for a number of faculty positions and he will start as independent researcher this year.

Mr. Jan Carius is planned to graduate in Summer 2020. The papers published in the course of the SNF project (and a number of papers that are in preparation/submitted) form a large fraction of his PhD thesis.

Mr. Vassilios Tsounis developed in the course of this SNF project an entirely novel learning pipeline for deployment on the real system. This massive investment is the basis for a number of publications in preparation. Paper [8] is a core contribution of his thesis.

Research Output

[1] J. Hwangbo, S. Inkyu, R. Siegwart and M. Hutter "Control of a quadrotor with reinforcement learning." in IEEE Robotics and Automation Letters, vol.2, no. 4, pp. 2096-2103, Jun. 2017

[2] J. Hwangbo, J. Lee and M. Hutter. "Per-contact iteration method for solving contact dynamics." in IEEE Robotics and Automation Letters, vol. 3, no. 2, pp. 895-902, 2018

[3] J. Carius, R. Ranftl, V. Koltun and M. Hutter, "Trajectory Optimization With Implicit Hard Contacts," in IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 3316-3323, Oct. 2018.

[4] J. Carius, R. Ranftl, V. Koltun and M. Hutter, "Trajectory Optimization for Legged Robots With Slipping Motions," IEEE Robotics and Automation Letters (accepted), 2019.

[5] J. Hwangbo, J. Lee, A. Dosovitskiy., D. Bellicoso, V. Tsounis, V. Koltu and M. Hutter, "Learning agile and dynamic motor skills for legged robots." Science Robotics, vol. 4, no. 26, eaau5872, Jan. 2019

[6] Lee, Joonho, Jemin Hwangbo, and Marco Hutter. "Robust Recovery Controller for a Quadrupedal Robot using Deep Reinforcement Learning." arXiv preprint

arXiv:1901.07517 (2019).

[7] J. Carius, F. Farshidian, and M. Hutter, “MPC-Net: A First Principles Guided Policy Search Approach,” (submitted to) IEEE Robot Autom. Lett., 2019.

[8] V. Tsounis, M. Alge, J. Lee, F. Farshidian, and M. Hutter, “DeepGait: Planning and Control of Quadrupedal Gaits usingDeep Reinforcement Learning,” (submitted to) IEEE Robot. Autom. Lett., 2019.